개발잡부

[kafka] macOS Kafka install / test

Let's install Kafka.

https://www.apache.org/dyn/closer.cgi?path=/kafka/2.8.0/kafka_2.13-2.8.0.tgz


# Download

wget https://archive.apache.org/dist/kafka/2.8.0/kafka_2.13-2.8.0.tgz

# Extract

tar xvf kafka_2.13-2.8.0.tgz

Start ZooKeeper

cd /Users/doo/kafka/kafka_2.13-2.8.0

bin/zookeeper-server-start.sh -daemon config/zookeeper.properties

Start Kafka

bin/kafka-server-start.sh -daemon config/server.properties

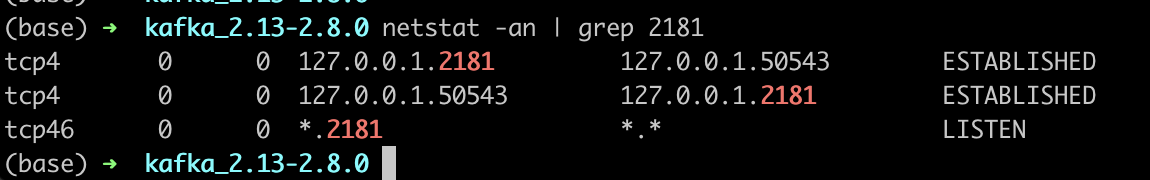

Verify that ZooKeeper is listening on port 2181

netstat -an | grep 2181

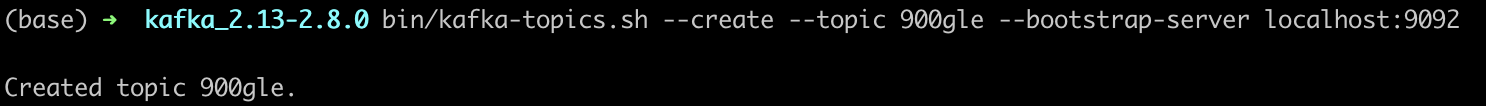

Create a topic

#bin/kafka-topics.sh --create --topic quickstart-event --bootstrap-server localhost:9092

bin/kafka-topics.sh --create --topic 900gle --bootstrap-server localhost:9092

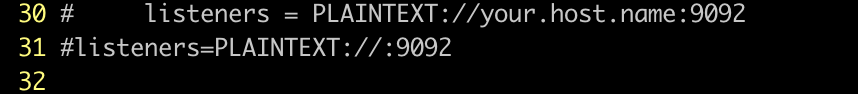

If you get a network error, uncomment the following line in config/server.properties and restart the broker:

listeners=PLAINTEXT://:9092

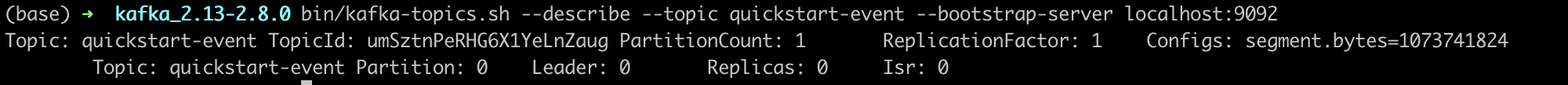
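The uncommenting step can also be scripted. The following is a minimal sketch, assuming the stock server.properties contains the line exactly as `#listeners=PLAINTEXT://:9092`; the function name `uncomment_listeners` is made up for illustration. It goes through a temporary file rather than `sed -i`, because the in-place flag takes different arguments on macOS (BSD sed) and GNU sed.

```shell
# Hypothetical helper: uncomment the default listeners line in a Kafka
# server.properties file. Assumes the commented line matches exactly.
uncomment_listeners() {
  props=$1
  tmp=$(mktemp) || return 1
  # Rewrite into a temp file, then copy back so the original file keeps
  # its permissions.
  sed 's|^#listeners=PLAINTEXT://:9092|listeners=PLAINTEXT://:9092|' "$props" > "$tmp" &&
    cat "$tmp" > "$props"
  status=$?
  rm -f "$tmp"
  return $status
}
```

From the Kafka directory it could be called as `uncomment_listeners config/server.properties`, followed by a broker restart.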

Check the topic

bin/kafka-topics.sh --describe --topic 900gle --bootstrap-server localhost:9092

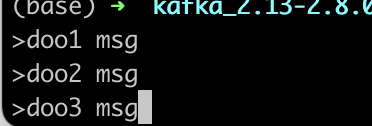

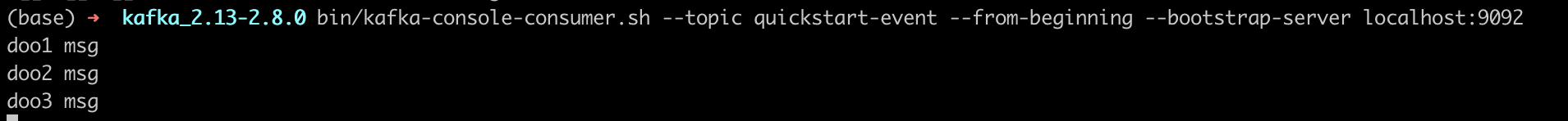

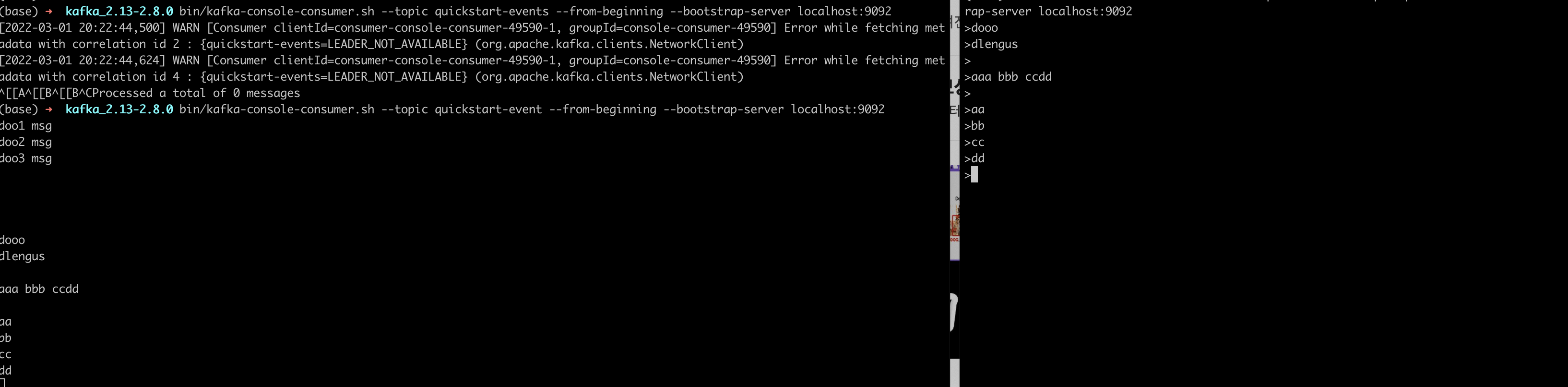

Run the producer

bin/kafka-console-producer.sh --topic 900gle --bootstrap-server localhost:9092

Run the consumer

bin/kafka-console-consumer.sh --topic 900gle --from-beginning --bootstrap-server localhost:9092

Messages typed into the producer appear in the consumer.

Left: consumer, right: producer
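The same producer/consumer check can be run without two interactive terminals. This is a rough sketch, not part of the original walkthrough: the `kafka_roundtrip` function and the `KAFKA_HOME` variable are assumptions made for illustration, and the 10-second idle timeout is an arbitrary choice. It pipes one message into the console producer, then reads the topic from the beginning and looks for that exact line.

```shell
# Hypothetical helper: send one message and read it back.
# Assumes KAFKA_HOME points at the extracted Kafka directory.
# Returns 0 if the consumer output contains the message as a full line.
kafka_roundtrip() {
  topic=$1
  msg=$2
  bootstrap=${3:-localhost:9092}
  # The console producer reads messages from stdin, one per line.
  printf '%s\n' "$msg" |
    "$KAFKA_HOME/bin/kafka-console-producer.sh" \
      --topic "$topic" --bootstrap-server "$bootstrap" &&
  # --timeout-ms makes the consumer exit once no message has arrived
  # for the given interval instead of waiting forever.
  "$KAFKA_HOME/bin/kafka-console-consumer.sh" \
    --topic "$topic" --from-beginning \
    --bootstrap-server "$bootstrap" --timeout-ms 10000 2>/dev/null |
    grep -Fxq -- "$msg"
}
```

With the broker from this post running, a call might look like `KAFKA_HOME=/Users/doo/kafka/kafka_2.13-2.8.0 kafka_roundtrip 900gle "hello"`.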

https://github.com/yahoo/CMAK/releases


2. Extract the archive after downloading

$ tar -xzvf CMAK-3.0.0.5.tar.gz

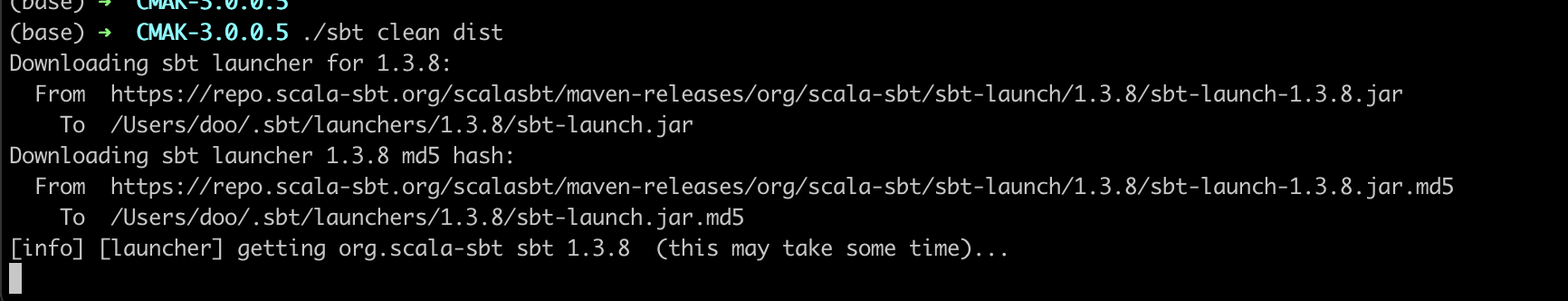

3. Run sbt

sbt: Simple Build Tool, a build tool for Scala

Run sbt from the extracted folder

$ cd CMAK-3.0.0.5/

$ ./sbt clean dist

4. Extract cmak-3.0.0.5.zip

Extract the zip archive produced by sbt to a suitable location.

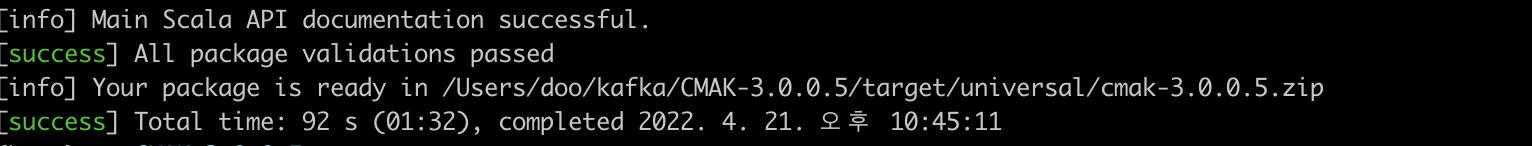

The path to the archive is shown in the success log that sbt prints.

Log

[info] Your package is ready in /Users/doo/kafka/CMAK-3.0.0.5/target/universal/cmak-3.0.0.5.zip

Extract the archive

$ unzip cmak-3.0.0.5.zip

Requirements

Configuration

The minimum configuration is the zookeeper hosts which are to be used for CMAK (pka kafka manager) state. This can be found in the application.conf file in conf directory. The same file will be packaged in the distribution zip file; you may modify settings after unzipping the file on the desired server.

cmak.zkhosts="my.zookeeper.host.com:2181"

You can specify multiple zookeeper hosts by comma delimiting them, like so:

cmak.zkhosts="my.zookeeper.host.com:2181,other.zookeeper.host.com:2181"

Alternatively, use the environment variable ZK_HOSTS if you don't want to hardcode any values.

ZK_HOSTS="my.zookeeper.host.com:2181"
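As an illustration of the comma-delimited format, the sketch below builds the value from a list of host names. The `zk_hosts` function is a made-up helper, and it assumes every ZooKeeper host listens on port 2181, as in the examples above.

```shell
# Hypothetical helper: join host names into the comma-delimited
# "host:port" list used by cmak.zkhosts and ZK_HOSTS.
# Assumes port 2181 for every host.
zk_hosts() {
  out=
  for h in "$@"; do
    # Prepend a comma only when the list is already non-empty.
    out="${out:+$out,}$h:2181"
  done
  printf '%s\n' "$out"
}
```

It could then feed the environment variable before starting the service, for example `export ZK_HOSTS="$(zk_hosts my.zookeeper.host.com other.zookeeper.host.com)"`.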

You can optionally enable/disable the following functionality by modifying the default list in application.conf :

application.features=["KMClusterManagerFeature","KMTopicManagerFeature","KMPreferredReplicaElectionFeature","KMReassignPartitionsFeature"]

- KMClusterManagerFeature - allows adding, updating, deleting cluster from CMAK (pka Kafka Manager)

- KMTopicManagerFeature - allows adding, updating, deleting topic from a Kafka cluster

- KMPreferredReplicaElectionFeature - allows running of preferred replica election for a Kafka cluster

- KMReassignPartitionsFeature - allows generating partition assignments and reassigning partitions

Consider setting these parameters for larger clusters with jmx enabled :

- cmak.broker-view-thread-pool-size=< 3 * number_of_brokers>

- cmak.broker-view-max-queue-size=< 3 * total # of partitions across all topics>

- cmak.broker-view-update-seconds=< cmak.broker-view-max-queue-size / (10 * number_of_brokers) >

Here is an example for a kafka cluster with 10 brokers, 100 topics, with each topic having 10 partitions giving 1000 total partitions with JMX enabled :

- cmak.broker-view-thread-pool-size=30

- cmak.broker-view-max-queue-size=3000

- cmak.broker-view-update-seconds=30
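The three sizing formulas above can be turned into a small calculator. This is only a sketch of the arithmetic in the list; the function name `cmak_broker_view_sizing` is hypothetical, and shell integer division truncates, so the update interval is rounded down when it does not divide evenly.

```shell
# Hypothetical helper: print suggested broker-view settings from the
# number of brokers and the total number of partitions across all topics.
cmak_broker_view_sizing() {
  brokers=$1
  partitions=$2
  pool=$((3 * $brokers))                 # 3 * number_of_brokers
  queue=$((3 * $partitions))             # 3 * total partitions
  update=$(($queue / (10 * $brokers)))   # max-queue-size / (10 * brokers)
  printf 'cmak.broker-view-thread-pool-size=%s\n' "$pool"
  printf 'cmak.broker-view-max-queue-size=%s\n' "$queue"
  printf 'cmak.broker-view-update-seconds=%s\n' "$update"
}
```

Calling `cmak_broker_view_sizing 10 1000` reproduces the 10-broker, 1000-partition example: 30, 3000, and 30.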

The following control the consumer offset cache's thread pool and queue:

- cmak.offset-cache-thread-pool-size=< default is # of processors>

- cmak.offset-cache-max-queue-size=< default is 1000>

- cmak.kafka-admin-client-thread-pool-size=< default is # of processors>

- cmak.kafka-admin-client-max-queue-size=< default is 1000>

You should increase the above for a large number of consumers with consumer polling enabled, though this mainly affects ZK-based consumer polling.

Kafka-managed consumer offsets are now consumed by KafkaManagedOffsetCache from the "__consumer_offsets" topic. Note that this has not been tested with a large number of offsets being tracked. There is a single thread per cluster consuming this topic, so it may not be able to keep up when a large number of offsets is pushed to the topic.

Authenticating a User with LDAP

Warning, you need to have SSL configured with CMAK (pka Kafka Manager) to ensure your credentials aren't passed unencrypted. Authenticating a User with LDAP is possible by passing the user credentials with the Authorization header. LDAP authentication is done on first visit, if successful, a cookie is set. On next request, the cookie value is compared with credentials from Authorization header. LDAP support is through the basic authentication filter.

- Configure basic authentication
  - basicAuthentication.enabled=true
  - basicAuthentication.realm=< basic authentication realm>
- Encryption parameters (optional, otherwise randomly generated on startup):
  - basicAuthentication.salt="some-hex-string-representing-byte-array"
  - basicAuthentication.iv="some-hex-string-representing-byte-array"
  - basicAuthentication.secret="my-secret-string"
- Configure LDAP/LDAPS authentication
  - basicAuthentication.ldap.enabled=< Boolean flag to enable/disable ldap authentication >
  - basicAuthentication.ldap.server=< fqdn of LDAP server>
  - basicAuthentication.ldap.port=< port of LDAP server>
  - basicAuthentication.ldap.username=< LDAP search username>
  - basicAuthentication.ldap.password=< LDAP search password>
  - basicAuthentication.ldap.search-base-dn=< LDAP search base>
  - basicAuthentication.ldap.search-filter=< LDAP search filter>
  - basicAuthentication.ldap.connection-pool-size=< number of connection to LDAP server>
  - basicAuthentication.ldap.ssl=< Boolean flag to enable/disable LDAPS>
- (Optional) Limit access to a specific LDAP Group
  - basicAuthentication.ldap.group-filter=< LDAP group filter>
  - basicAuthentication.ldap.ssl-trust-all=< Boolean flag to allow non-expired invalid certificates>

Example (Online LDAP Test Server):

- basicAuthentication.ldap.enabled=true

- basicAuthentication.ldap.server="ldap.forumsys.com"

- basicAuthentication.ldap.port=389

- basicAuthentication.ldap.username="cn=read-only-admin,dc=example,dc=com"

- basicAuthentication.ldap.password="password"

- basicAuthentication.ldap.search-base-dn="dc=example,dc=com"

- basicAuthentication.ldap.search-filter="(uid=$capturedLogin$)"

- basicAuthentication.ldap.group-filter="cn=allowed-group,ou=groups,dc=example,dc=com"

- basicAuthentication.ldap.connection-pool-size=10

- basicAuthentication.ldap.ssl=false

- basicAuthentication.ldap.ssl-trust-all=false

Deployment

The command below will create a zip file which can be used to deploy the application.

./sbt clean dist

Please refer to play framework documentation on production deployment/configuration.

If java is not in your path, or you need to build against a specific java version, please use the following (the example assumes zulu java11):

$ PATH=/usr/lib/jvm/zulu-11-amd64/bin:$PATH \

JAVA_HOME=/usr/lib/jvm/zulu-11-amd64 \

/path/to/sbt -java-home /usr/lib/jvm/zulu-11-amd64 clean dist

This ensures that the 'java' and 'javac' binaries in your path are first looked up in the correct location. Next, for all downstream tools that only listen to JAVA_HOME, it points them to the java11 location. Lastly, it tells sbt to use the java11 location as well.

Starting the service

After extracting the produced zipfile, and changing the working directory to it, you can run the service like this:

$ bin/cmak

By default, it will choose port 9000. This is overridable, as is the location of the configuration file. For example:

$ bin/cmak -Dconfig.file=/path/to/application.conf -Dhttp.port=8080

Again, if java is not in your path, or you need to run against a different version of java, add the -java-home option as follows:

$ bin/cmak -java-home /usr/lib/jvm/zulu-11-amd64

Starting the service with Security

To add JAAS configuration for SASL, add the config file location at start:

$ bin/cmak -Djava.security.auth.login.config=/path/to/my-jaas.conf

NOTE: Make sure the user running CMAK (pka kafka manager) has read permissions on the jaas config file

Packaging

If you'd like to create a Debian or RPM package instead, you can run one of:

sbt debian:packageBin

sbt rpm:packageBin

Credits

Most of the utils code has been adapted to work with Apache Curator from Apache Kafka.

Name and Management

CMAK was renamed from its previous name due to this issue. CMAK is designed to be used with Apache Kafka and is offered to support the needs of the Kafka community. This project is currently managed by employees at Verizon Media and the community who supports this project.

License

Licensed under the terms of the Apache License 2.0. See accompanying LICENSE file for terms.

Consumer/Producer Lag

Producer offset is polled. Consumer offset is read from the offset topic for Kafka-based consumers. This means the reported lag may be negative, since we are consuming offsets from the offset topic faster than we poll the producer offset. This is normal and not a problem.

Migration from Kafka Manager to CMAK

- Copy config files from old version to new version (application.conf, consumer.properties)

- Change start script to use bin/cmak instead of bin/kafka-manager
