| 일 | 월 | 화 | 수 | 목 | 금 | 토 |

|---|---|---|---|---|---|---|

| 1 | 2 | 3 | 4 | |||

| 5 | 6 | 7 | 8 | 9 | 10 | 11 |

| 12 | 13 | 14 | 15 | 16 | 17 | 18 |

| 19 | 20 | 21 | 22 | 23 | 24 | 25 |

| 26 | 27 | 28 | 29 | 30 | 31 |

Tags

- zip 파일 암호화

- query

- ELASTIC

- analyzer test

- TensorFlow

- API

- Mac

- 파이썬

- licence delete curl

- Python

- License

- 차트

- sort

- high level client

- token filter test

- springboot

- matplotlib

- flask

- 900gle

- license delete

- MySQL

- Test

- Java

- Elasticsearch

- Kafka

- aggregation

- docker

- plugin

- zip 암호화

- aggs

Archives

- Today

- Total

개발잡부

[python] 데이터검증 본문

반응형

키워드별 속성 정보를 수정했는데..

as-is 와 to-be 의 데이터가 일치 해야 하는지 검증이 필요하다.

- 전체카운트를 구하고

- 키워드를 추출해서

- 키워드별 속성을 집계(aggregation)

- 결과를 파일로 추출

doo 의 가상환경으로

conda activate doo

ed /Users/doo/doo_py/똥플러스/attribute

python attr/qa_test.py

원랜 아래와 같았지만

스토어 별 전수검사로 변경

# -*- coding: utf-8 -*-

import json

import time

import datetime as dt

import urllib3

from elasticsearch import Elasticsearch

urllib3.disable_warnings(urllib3.exceptions.InsecureRequestWarning)

def get_store_id():

with open(STOREID_FILE) as index_file:

source = index_file.read().strip()

response = client.search(index=INDEX_NAME, body=source)

store_ids = []

for val in response['aggregations']['STOREID']['buckets']:

store_ids.append(val['key'])

return store_ids

def search_data(store_id):

f_v = open("./result/prd/prd_report_" + str(now.month) +"-" + str(now.day) +"_" + now.strftime("%X") + "_" + store_id + ".txt", 'w')

with open(INDEX_FILE) as index_file:

source = index_file.read().strip()

response = client.search(index=INDEX_NAME, body=source)

f_v.write("total count : " + str(response['hits']['total']['value']) + "\n")

with open(TERM_FILE) as term_file:

ts = term_file.read().strip()

for val in response['aggregations']['KEYWORD']['buckets']:

f_v.write("\n")

f_v.write("keyword : " + val['key'] + "\n")

query = ts.replace("${keyword}", val['key']).replace("${storeId}", store_id + ",0")

ts_response = client.search(index=INDEX_NAME, body=query)

for so in ts_response['hits']['hits']:

for at in so['_source']['keywordAttrGroupList']:

for ml in at['keywordAttrMngList']:

str_line = val['key'] + " : " + at['gattrNm'] + " : " + ml['attrNm'] + "\n"

f_v.write(str_line)

f_v.close()

##### MAIN SCRIPT #####

if __name__ == '__main__':

INDEX_NAME = "keyword-attribute"

INDEX_FILE = "./sql/query.json"

TERM_FILE = "./sql/bool.json"

STOREID_FILE = "./sql/story_id_aggregation.json"

SEARCH_SIZE = 3

now = dt.datetime.now()

client = Elasticsearch("https://elastic:elastic1!@totalsearch-es.homeplus.co.kr:443/", ca_certs=False,

verify_certs=False)

store_ids = get_store_id();

print("total store count : " + str(len(store_ids)))

for store_id in store_ids:

search_data(store_id)

print(str(store_id) + str(dt.datetime.now()))

time.sleep(2)

print("Done.")

--- 이전내용 ---

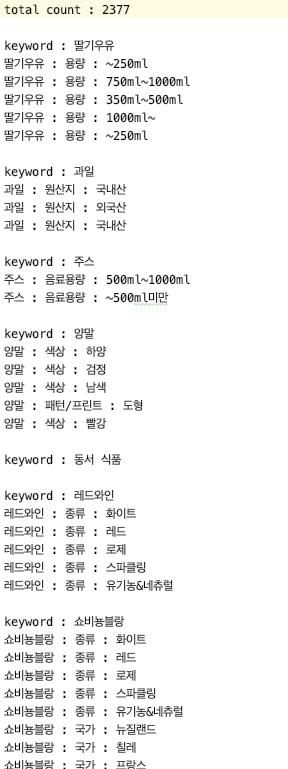

위의 디렉토리에 결과 파일이 생성되고 파일의 내용은 아래와 같음

# -*- coding: utf-8 -*-

import json

import datetime as dt

from elasticsearch import Elasticsearch

def search_data():

now = dt.datetime.now()

store_id = "106";

f_v = open("./result/qa_report_" + str(now.month) +"-" + str(now.day) +"_" + now.strftime("%X") + "_" + store_id + ".txt", 'w')

with open(INDEX_FILE) as index_file:

source = index_file.read().strip()

response = client.search(index=INDEX_NAME, body=source)

f_v.write("total count : " + str(response['hits']['total']['value']) + "\n")

with open(TERM_FILE) as term_file:

ts = term_file.read().strip()

for val in response['aggregations']['KEYWORD']['buckets']:

f_v.write("\n")

f_v.write("keyword : " + val['key'] + "\n")

query = ts.replace("${keyword}", val['key']).replace("${storeId}", store_id + ",0")

ts_response = client.search(index=INDEX_NAME, body=query)

for so in ts_response['hits']['hits']:

for at in so['_source']['keywordAttrGroupList']:

for ml in at['keywordAttrMngList']:

str_line = val['key'] + " : " + at['gattrNm'] + " : " + ml['attrNm'] + "\n"

f_v.write(str_line)

f_v.close()

##### MAIN SCRIPT #####

if __name__ == '__main__':

INDEX_NAME = "keyword-attribute"

INDEX_FILE = "./sql/query.json"

TERM_FILE = "./sql/bool.json"

SEARCH_SIZE = 3

client = Elasticsearch("https://user_id:pw@host.kr:port/", ca_certs=False,

verify_certs=False)

search_data()

print("Done.")반응형

'Python' 카테고리의 다른 글

| [python] 오탈자 교정 - SymSpellpy (0) | 2023.07.02 |

|---|---|

| [python] .txt 파일 라인 카운트 (1) | 2023.05.19 |

| [python] API 응답시간 확인 (0) | 2023.05.04 |

| [python] DB data to json file (0) | 2023.04.30 |

| [python] mysql 연동 - PyMySQL (0) | 2023.04.30 |