개발잡부

[es8] nori analyzer

Testing the dictionary file of the nori morphological analyzer

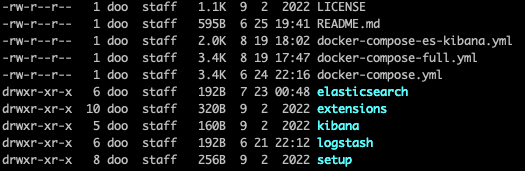

I'll use the project at /Users/doo/docker/es8.8.1.

Opening docker-compose.yml shows that it is packed with the containers 900gle uses. If my PC were powerful enough I could just run them all, but mine isn't, so I'll create a .yml file that keeps only es and kibana.

docker-compose.yml

    version: '3.7'

    services:

      # The 'setup' service runs a one-off script which initializes the
      # 'logstash_internal' and 'kibana_system' users inside Elasticsearch with the
      # values of the passwords defined in the '.env' file.
      #
      # This task is only performed during the *initial* startup of the stack. On all
      # subsequent runs, the service simply returns immediately, without performing
      # any modification to existing users.
      setup:
        build:
          context: setup/
          args:
            ELASTIC_VERSION: ${ELASTIC_VERSION}
        init: true
        volumes:
          - setup:/state:Z
        environment:
          ELASTIC_PASSWORD: ${ELASTIC_PASSWORD:-}
          LOGSTASH_INTERNAL_PASSWORD: ${LOGSTASH_INTERNAL_PASSWORD:-}
          KIBANA_SYSTEM_PASSWORD: ${KIBANA_SYSTEM_PASSWORD:-}
        networks:
          - elk
        depends_on:
          - elasticsearch

      elasticsearch:
        build:
          context: elasticsearch/
          args:
            ELASTIC_VERSION: ${ELASTIC_VERSION}
        volumes:
          - ./elasticsearch/config/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml:ro,z
          - elasticsearch:/usr/share/elasticsearch/data:z
        ports:
          - "9200:9200"
          - "9300:9300"
        environment:
          ES_JAVA_OPTS: -Xms512m -Xmx512m
          # Bootstrap password.
          # Used to initialize the keystore during the initial startup of
          # Elasticsearch. Ignored on subsequent runs.
          ELASTIC_PASSWORD: ${ELASTIC_PASSWORD:-}
          # Use single node discovery in order to disable production mode and avoid bootstrap checks.
          # see: https://www.elastic.co/guide/en/elasticsearch/reference/current/bootstrap-checks.html
          discovery.type: single-node
        networks:
          - elk

      logstash:
        build:
          context: logstash/
          args:
            ELASTIC_VERSION: ${ELASTIC_VERSION}
        volumes:
          - ./logstash/config/logstash.yml:/usr/share/logstash/config/logstash.yml:ro,Z
          - ./logstash/pipeline:/usr/share/logstash/pipeline:ro,Z
        ports:
          - "5044:5044"
          - "50000:50000/tcp"
          - "50000:50000/udp"
          - "9600:9600"
        environment:
          LS_JAVA_OPTS: -Xms256m -Xmx256m
          LOGSTASH_INTERNAL_PASSWORD: ${LOGSTASH_INTERNAL_PASSWORD:-}
        networks:
          - elk
        depends_on:
          - elasticsearch

      kibana:
        build:
          context: kibana/
          args:
            ELASTIC_VERSION: ${ELASTIC_VERSION}
        volumes:
          - ./kibana/config/kibana.yml:/usr/share/kibana/config/kibana.yml:ro,Z
        ports:
          - "5601:5601"
        environment:
          KIBANA_SYSTEM_PASSWORD: ${KIBANA_SYSTEM_PASSWORD:-}
        networks:
          - elk
        depends_on:
          - elasticsearch

      zookeeper:
        container_name: zookeeper
        image: confluentinc/cp-zookeeper:latest
        ports:
          - "9900:2181"
        environment:
          ZOOKEEPER_CLIENT_PORT: 2181
          ZOOKEEPER_TICK_TIME: 2000
        networks:
          - elk

      kafka:
        container_name: kafka
        image: confluentinc/cp-kafka:latest
        depends_on:
          - zookeeper
        ports:
          - "9092:9092"
        environment:
          KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181
          KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://kafka:29092,PLAINTEXT_HOST://localhost:9092
          KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: PLAINTEXT:PLAINTEXT,PLAINTEXT_HOST:PLAINTEXT
          KAFKA_INTER_BROKER_LISTENER_NAME: PLAINTEXT
          KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 1
          KAFKA_CREATE_TOPICS: "5amsung:1:1"
        volumes:
          - /var/run/docker.sock:/var/run/docker.sock
        networks:
          - elk

    networks:
      elk:
        driver: bridge

    volumes:
      setup:
      elasticsearch:

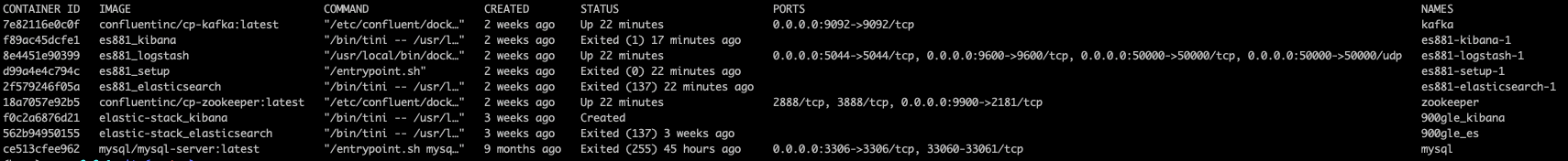

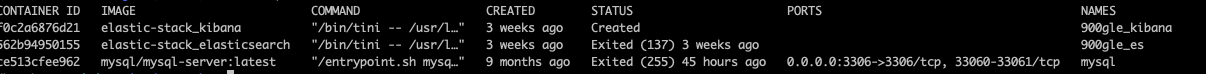

Check the container list

    docker ps -a

There is quite a lot in there.

Remove the related containers and test again

    docker compose down

Create a docker-compose-es-kibana.yml file that keeps only es and kibana
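If you would rather derive the trimmed file than edit it by hand, the pruning step can be sketched in Python. This is only an illustration: `keep_services` is a hypothetical helper, the `compose` dict is a hand-written stand-in for the parsed file (loading the real YAML would need a parser such as PyYAML), and it assumes the short list form of `depends_on` used in this file.

```python
import copy


def keep_services(compose, keep):
    """Return a copy of a Compose mapping that contains only the services in `keep`."""
    trimmed = copy.deepcopy(compose)
    trimmed["services"] = {
        name: svc
        for name, svc in trimmed.get("services", {}).items()
        if name in keep
    }
    # Drop depends_on entries that point at services we removed.
    for svc in trimmed["services"].values():
        deps = [d for d in svc.get("depends_on", []) if d in keep]
        if deps:
            svc["depends_on"] = deps
        else:
            svc.pop("depends_on", None)
    return trimmed


# Hand-written stand-in for the parsed docker-compose.yml (assumption).
compose = {
    "services": {
        "elasticsearch": {"ports": ["9200:9200"]},
        "kibana": {"ports": ["5601:5601"], "depends_on": ["elasticsearch"]},
        "logstash": {"depends_on": ["elasticsearch"]},
        "zookeeper": {},
        "kafka": {"depends_on": ["zookeeper"]},
    }
}

slim = keep_services(compose, {"elasticsearch", "kibana"})
print(sorted(slim["services"]))
```

The sketch leaves top-level `networks` and `volumes` untouched, so any named volume that only a removed service used would still need to be cleaned up by hand.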

Let's run it (using the -f option)

    docker compose -f docker-compose-es-kibana.yml up -d --build

Testing 첫구매쿠폰 ("first-purchase coupon")

    GET _analyze
    {
      "tokenizer": "nori_tokenizer",
      "text": [
        "첫구매쿠폰"
      ]
    }

Result

    {
      "tokens": [
        {
          "token": "첫",
          "start_offset": 0,
          "end_offset": 1,
          "type": "word",
          "position": 0
        },
        {
          "token": "구매",
          "start_offset": 1,
          "end_offset": 3,
          "type": "word",
          "position": 1
        },
        {
          "token": "쿠폰",
          "start_offset": 3,
          "end_offset": 5,
          "type": "word",
          "position": 2
        }
      ]
    }

nori_tokenizer options

- user_dictionary : path to the file that holds the user dictionary.
- user_dictionary_rules : user dictionary entries given inline as an array.
- decompound_mode : determines how compound words are stored. One of the following three values can be used.
  - none : does not split the compound into its roots; stores only the complete compound.
  - discard (default) : splits the compound and stores only the individual roots.
  - mixed : stores both the roots and the compound.
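To make the three modes concrete, here is a toy Python sketch. It does not call Elasticsearch; `apply_decompound_mode` is a hypothetical function that only mimics which tokens each mode keeps, using the 가곡역 (가곡 + 역) example from the Elasticsearch documentation.

```python
def apply_decompound_mode(compound, parts, mode="discard"):
    """Mimic which tokens are kept for one compound under each decompound_mode."""
    if mode == "none":
        return [compound]            # keep only the compound itself
    if mode == "discard":
        return list(parts)           # keep only the decomposed roots
    if mode == "mixed":
        return [compound, *parts]    # keep the compound and its roots
    raise ValueError(f"unknown decompound_mode: {mode!r}")


for mode in ("none", "discard", "mixed"):
    print(mode, apply_decompound_mode("가곡역", ["가곡", "역"], mode))
```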

Tokenizer

- nori_tokenizer

Token filters

- nori_part_of_speech

- nori_readingform

Add 첫구매 to user_dictionary.txt
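For reference, a nori user dictionary is a plain text file with one entry per line, and an entry may optionally be followed by its space-separated decomposition (for example `세종시 세종 시`). The sketch below builds such lines in Python. `format_entry` is a hypothetical helper and the 세종시 line is only an illustration; this post adds just 첫구매.

```python
def format_entry(term, parts=None):
    """Return one user-dictionary line: the term, optionally followed by its parts."""
    if not parts:
        return term                      # plain entry with no decomposition
    return " ".join([term, *parts])      # compound entry with explicit decomposition


lines = [
    format_entry("첫구매"),                   # the entry added in this post
    format_entry("세종시", ["세종", "시"]),     # illustrative compound entry (assumption)
]
print("\n".join(lines))
```

An entry written without a decomposition, like 첫구매 here, gives the tokenizer no parts to split it into.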

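doo-nori-tokenizer reads the user dictionary, and its decompound_mode is set to mixed.

However, since 첫구매 is the only entry registered, and it has no decomposition, the text is still split off as the single token 첫구매 even in mixed mode.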
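For context, a tokenizer definition like the one below is nested under `settings.analysis` when the index is created. The following Python sketch assembles such a request body and prints it as JSON. The analyzer name `doo-nori-analyzer` is a hypothetical addition, since the post does not show the rest of the `test_indexer` settings.

```python
import json

index_body = {
    "settings": {
        "analysis": {
            "tokenizer": {
                "doo-nori-tokenizer": {
                    "type": "nori_tokenizer",
                    "user_dictionary": "user_dictionary.txt",
                    "decompound_mode": "mixed",
                }
            },
            "analyzer": {
                # Hypothetical analyzer name; not taken from the post.
                "doo-nori-analyzer": {
                    "type": "custom",
                    "tokenizer": "doo-nori-tokenizer",
                }
            },
        }
    }
}

# ensure_ascii=False keeps any Korean text readable in the printed JSON.
print(json.dumps(index_body, ensure_ascii=False, indent=2))
```

The printed JSON could be sent as the body of `PUT test_indexer`. The `user_dictionary` path is resolved relative to the Elasticsearch config directory.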

    "tokenizer": {
      "doo-nori-tokenizer": {
        "type": "nori_tokenizer",
        "user_dictionary": "user_dictionary.txt",
        "decompound_mode": "mixed"
      }
    }

Run the same text through the custom tokenizer:

    GET test_indexer/_analyze
    {
      "tokenizer": "doo-nori-tokenizer",
      "text": [
        "첫구매쿠폰"
      ]
    }

Result

    {
      "tokens": [
        {
          "token": "첫구매",
          "start_offset": 0,
          "end_offset": 3,
          "type": "word",
          "position": 0
        },
        {
          "token": "쿠폰",
          "start_offset": 3,
          "end_offset": 5,
          "type": "word",
          "position": 1
        }
      ]
    }
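To compare the two runs side by side, the token lists can be pulled out of the `_analyze` responses with a few lines of Python. This is a sketch: `token_terms` is a hypothetical helper, and the two dicts are trimmed copies of the responses shown above, keeping only the fields it reads.

```python
def token_terms(analyze_response):
    """Return the token strings of an _analyze response, ordered by position."""
    tokens = sorted(analyze_response["tokens"], key=lambda t: t["position"])
    return [t["token"] for t in tokens]


# Response of the built-in nori_tokenizer (trimmed).
default_response = {"tokens": [
    {"token": "첫", "position": 0},
    {"token": "구매", "position": 1},
    {"token": "쿠폰", "position": 2},
]}

# Response of doo-nori-tokenizer with the user dictionary (trimmed).
custom_response = {"tokens": [
    {"token": "첫구매", "position": 0},
    {"token": "쿠폰", "position": 1},
]}

before = token_terms(default_response)
after = token_terms(custom_response)
print(before)  # ['첫', '구매', '쿠폰']
print(after)   # ['첫구매', '쿠폰']
```

With the dictionary entry in place, 첫 and 구매 are no longer emitted separately; 첫구매 comes out as one token.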
