
[tensorflow 2] Sentence encoder A/B test


Extract vectors from product names with two models:

    embed_a = hub.load("https://tfhub.dev/google/universal-sentence-encoder/4")
    embed_b = hub.load("https://tfhub.dev/google/universal-sentence-encoder-multilingual/3")

Then compare a and b.

    # -*- coding: utf-8 -*-
    import json

    from elasticsearch import Elasticsearch
    from elasticsearch.helpers import bulk
    import tensorflow_hub as hub
    import tensorflow_text  # not called directly; registers ops the multilingual model needs
    import kss
    import numpy

    ##### INDEXING #####

    def index_data():
        print("Creating the '" + INDEX_NAME_A + "' index.")
        print("Creating the '" + INDEX_NAME_B + "' index.")
        # Drop both indices if they already exist (a 404 is ignored).
        client.indices.delete(index=INDEX_NAME_A, ignore=[404])
        client.indices.delete(index=INDEX_NAME_B, ignore=[404])

        # Both indices share the same settings and mappings.
        with open(INDEX_FILE) as index_file:
            source = index_file.read().strip()
        client.indices.create(index=INDEX_NAME_A, body=source)
        client.indices.create(index=INDEX_NAME_B, body=source)

        count = 0
        docs = []
        # DATA_FILE holds one JSON document per line.
        with open(DATA_FILE, encoding="utf-8") as data_file:
            for line in data_file:
                line = line.strip()
                json_data = json.loads(line)
                docs.append(json_data)
                count += 1

                # Flush a full batch into both indices.
                if count % BATCH_SIZE == 0:
                    index_batch_a(docs)
                    index_batch_b(docs)
                    docs = []
                    print("Indexed {} documents.".format(count))

            # Flush the final, partially filled batch.
            if docs:
                index_batch_a(docs)
                index_batch_b(docs)
                print("Indexed {} documents.".format(count))

        client.indices.refresh(index=INDEX_NAME_A)
        client.indices.refresh(index=INDEX_NAME_B)
        print("Done indexing.")

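    def paragraph_index(paragraph, embed_fn):
        # Split the first 100 characters into sentences, embed each sentence
        # with embed_fn (e.g. embed_text_a or embed_text_b), and average the vectors.
        avg_paragraph_vec = numpy.zeros((1, 512))
        sent_count = 0
        for sent in kss.split_sentences(paragraph[0:100]):
            # Accumulate the sentence vectors; they are averaged below.
            avg_paragraph_vec += embed_fn([sent])
            sent_count += 1
        if sent_count:  # avoid dividing by zero for empty input
            avg_paragraph_vec /= sent_count
        return avg_paragraph_vec.ravel(order='C')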

    def index_batch_a(docs):
        name = [doc["name"] for doc in docs]
        name_vectors = embed_text_a(name)

        requests = []
        for i, doc in enumerate(docs):
            # Copy the document so the bulk metadata does not leak into the
            # same docs list that index_batch_b() receives afterwards.
            request = dict(doc)
            request["_op_type"] = "index"
            request["_index"] = INDEX_NAME_A
            request["name_vector"] = name_vectors[i]
            requests.append(request)
        bulk(client, requests)

    def index_batch_b(docs):
        name = [doc["name"] for doc in docs]
        name_vectors = embed_text_b(name)

        requests = []
        for i, doc in enumerate(docs):
            request = dict(doc)
            request["_op_type"] = "index"
            request["_index"] = INDEX_NAME_B
            request["name_vector"] = name_vectors[i]
            requests.append(request)
        bulk(client, requests)

    ##### EMBEDDING #####

    def embed_text_a(texts):
        vectors = embed_a(texts)
        return [vector.numpy().tolist() for vector in vectors]

    def embed_text_b(texts):
        vectors = embed_b(texts)
        return [vector.numpy().tolist() for vector in vectors]

##### MAIN SCRIPT #####

if __name__ == '__main__':

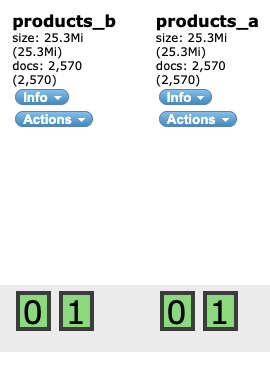

INDEX_NAME_A = "products_a"

INDEX_NAME_B = "products_b"

INDEX_FILE = "./data/products/index.json"

DATA_FILE = "./data/products/products.json"

BATCH_SIZE = 100

SEARCH_SIZE = 3

print("Downloading pre-trained embeddings from tensorflow hub...")

embed_a = hub.load("https://tfhub.dev/google/universal-sentence-encoder/4")

embed_b = hub.load("https://tfhub.dev/google/universal-sentence-encoder-multilingual/3")

client = Elasticsearch(http_auth=('elastic', 'dlengus'))

index_data()

print("Done.")
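The batching in `index_data()` (collect `BATCH_SIZE` documents, flush them, then flush whatever is left over) can be tried without Elasticsearch or TensorFlow. The following is only a minimal sketch of that pattern, not the script's actual code: `iter_batches` is a hypothetical helper name, and the sample lines are made up to mimic the one-JSON-object-per-line layout of `DATA_FILE`.

```python
import json


def iter_batches(lines, batch_size):
    """Yield lists of parsed JSON documents, at most `batch_size` per list."""
    batch = []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines instead of failing in json.loads
        batch.append(json.loads(line))
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        yield batch  # final, partially filled batch


# Made-up sample data, one JSON object per line (assumption for illustration).
sample_lines = ['{"id": "%d", "name": "item %d"}' % (i, i) for i in range(5)]
batch_sizes = [len(batch) for batch in iter_batches(sample_lines, 2)]
print(batch_sizes)
```

With a generator like this, the "flush the remainder" step lives in one place instead of being repeated after the loop.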

Next, category information is extracted as well.

The index with category information added: a `category` field is added to the index.json file.

    {
      "settings": {
        "number_of_shards": 2,
        "number_of_replicas": 0
      },
      "mappings": {
        "dynamic": "true",
        "_source": {
          "enabled": "true"
        },
        "properties": {
          "name": {
            "type": "text"
          },
          "name_vector": {
            "type": "dense_vector",
            "dims": 512
          },
          "price": {
            "type": "keyword"
          },
          "id": {
            "type": "keyword"
          },
          "category": {
            "type": "text"
          }
        }
      }
    }
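The mapping fixes `name_vector` at 512 dimensions, which is the output size of both sentence encoders used here. As far as I know, Elasticsearch rejects a document whose vector length differs from `dims`, so a cheap check before calling `bulk` can save a failed batch. The sketch below is an assumption-laden illustration: `vector_dims` and `has_valid_vector` are hypothetical helpers, and the two documents are made up.

```python
import json

# Trimmed copy of the mapping above; only the vector field matters here.
INDEX_JSON = """
{
  "mappings": {
    "properties": {
      "name": {"type": "text"},
      "name_vector": {"type": "dense_vector", "dims": 512},
      "category": {"type": "text"}
    }
  }
}
"""


def vector_dims(index_body, field="name_vector"):
    """Return the `dims` declared for a dense_vector field in an index body."""
    props = json.loads(index_body)["mappings"]["properties"]
    return props[field]["dims"]


def has_valid_vector(doc, dims, field="name_vector"):
    """True if the document carries a vector of exactly `dims` numbers."""
    vector = doc.get(field)
    return isinstance(vector, list) and len(vector) == dims


dims = vector_dims(INDEX_JSON)
good_doc = {"name": "example", "name_vector": [0.0] * 512}  # made-up document
bad_doc = {"name": "example", "name_vector": [0.0] * 300}   # wrong length
print(dims, has_valid_vector(good_doc, dims), has_valid_vector(bad_doc, dims))
```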

    # -*- coding: utf-8 -*-
    import time
    import json

    from elasticsearch import Elasticsearch
    from elasticsearch.helpers import bulk
    import tensorflow_hub as hub
    import tensorflow_text  # not called directly; registers ops the multilingual model needs
    import kss
    import numpy

    ##### SEARCHING #####

    def run_query_loop():
        while True:
            try:
                handle_query()
            except KeyboardInterrupt:
                return

    def search_by_vector(index_name, query_vector):
        # Score every document by cosine similarity to the query vector.
        # Adding 1.0 keeps the score non-negative, which script_score requires.
        script_query = {
            "script_score": {
                "query": {"match_all": {}},
                "script": {
                    "source": "cosineSimilarity(params.query_vector, doc['name_vector']) + 1.0",
                    "params": {"query_vector": query_vector}
                }
            }
        }
        return client.search(
            index=index_name,
            body={
                "size": SEARCH_SIZE,
                "query": script_query,
                "_source": {"includes": ["name", "price"]}
            }
        )

    def handle_query():
        query = input("Enter query: ")

        # Run the same query against both indices and time each model separately.
        for label, index_name, embed_fn in (
            ("CASE A", INDEX_NAME_A, embed_text_a),
            ("CASE B", INDEX_NAME_B, embed_text_b),
        ):
            embedding_start = time.time()
            query_vector = embed_fn([query])[0]
            embedding_time = time.time() - embedding_start

            search_start = time.time()
            response = search_by_vector(index_name, query_vector)
            search_time = time.time() - search_start

            print()
            print("{} :".format(label))
            print("{} total hits.".format(response["hits"]["total"]["value"]))
            print("embedding time: {:.2f} ms".format(embedding_time * 1000))
            print("search time: {:.2f} ms".format(search_time * 1000))
            for hit in response["hits"]["hits"]:
                print("id: {}, score: {}".format(hit["_id"], hit["_score"]))
                print(hit["_source"])
                print()

    ##### INDEXING #####

    # index_data(), paragraph_index(), index_batch_a() and index_batch_b() are
    # the same as in the indexing script above; the search loop does not call them.

    ##### EMBEDDING #####

    def embed_text_a(texts):
        vectors = embed_a(texts)
        return [vector.numpy().tolist() for vector in vectors]

    def embed_text_b(texts):
        vectors = embed_b(texts)
        return [vector.numpy().tolist() for vector in vectors]

    ##### MAIN SCRIPT #####

    if __name__ == '__main__':
        INDEX_NAME_A = "products_a"
        INDEX_NAME_B = "products_b"
        INDEX_FILE = "./data/products/index.json"

        DATA_FILE = "./data/products/products.json"
        BATCH_SIZE = 100

        SEARCH_SIZE = 3

        print("Downloading pre-trained embeddings from tensorflow hub...")
        embed_a = hub.load("https://tfhub.dev/google/universal-sentence-encoder/4")
        embed_b = hub.load("https://tfhub.dev/google/universal-sentence-encoder-multilingual/3")

        client = Elasticsearch(http_auth=('elastic', 'dlengus'))

        run_query_loop()

        print("Done.")
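To read the scores the search script prints, it helps to see what the `cosineSimilarity(...) + 1.0` expression computes. The sketch below mirrors it in plain Python under stated assumptions: `cosine_similarity` and `script_score` are hypothetical local helpers (not Elasticsearch APIs), and the 2-dimensional vectors are toy values, whereas the real ones have 512 dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def script_score(query_vector, doc_vector):
    """Plain-Python mirror of the script source: cosineSimilarity(...) + 1.0."""
    return cosine_similarity(query_vector, doc_vector) + 1.0


# Toy vectors, made up for illustration.
same = script_score([1.0, 0.0], [2.0, 0.0])        # same direction
orthogonal = script_score([1.0, 0.0], [0.0, 3.0])  # unrelated direction
opposite = script_score([1.0, 0.0], [-1.0, 0.0])   # opposite direction
print(same, orthogonal, opposite)
```

The score therefore ranges from 0 to 2, and a printed score of about 1.5 corresponds to a cosine similarity of about 0.5.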

Query: 아이폰 (iPhone)

CASE A :

id: 28GcUX4BAf0FcTmqzQtE, score: 1.5204558

고급오메가호차-30mm

id: tMGcUX4BAf0FcTmqzQtE, score: 1.501778

강력한스핀형엑시옴오메가4아시아탁구러버레드

id: d8GcUX4BAf0FcTmqvgJd, score: 1.3692374

나이키 BV6887-010

CASE B :

id: asGcUX4BAf0FcTmq0Q6g, score: 1.5843368

Apple 아이폰 SE2 128GB (LG U+)

id: X8GcUX4BAf0FcTmq0Q6g, score: 1.560456

Apple 아이폰 SE2 256GB (KT)

id: bMGcUX4BAf0FcTmq0Q6g, score: 1.5571916

Apple 아이폰 11 Pro 64GB [LG U+]

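Drop model A. For this Korean query, the English-only universal-sentence-encoder (A) returned unrelated products, while the multilingual model (B) returned iPhones in all three top hits.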
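The comparison above was judged by eye. One rough way to put a number on it is to count how many of the top hits contain the query string. This is only a sketch of one possible check, not a proper relevance metric: `keyword_hit_rate` is a hypothetical helper, and the product names are copied from the results above.

```python
def keyword_hit_rate(query, names):
    """Fraction of result names that contain the query string."""
    if not names:
        return 0.0
    return sum(query in name for name in names) / len(names)


query = "아이폰"
# Top-3 product names copied from the results above.
case_a = [
    "고급오메가호차-30mm",
    "강력한스핀형엑시옴오메가4아시아탁구러버레드",
    "나이키 BV6887-010",
]
case_b = [
    "Apple 아이폰 SE2 128GB (LG U+)",
    "Apple 아이폰 SE2 256GB (KT)",
    "Apple 아이폰 11 Pro 64GB [LG U+]",
]
rate_a = keyword_hit_rate(query, case_a)
rate_b = keyword_hit_rate(query, case_b)
print(rate_a, rate_b)
```

A substring match undercounts results that are relevant but worded differently, so it works best as a quick sanity check across many queries.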
