
개발잡부

[es] aggregation test 4


import time

import matplotlib.pyplot as plt
import numpy as np
from elasticsearch import Elasticsearch
from matplotlib.collections import EventCollection

# macOS font with Korean glyph support
plt.rcParams['font.family'] = 'AppleGothic'

def get_query(keyword):
    script_query = {
        "bool": {
            "filter": [
                {
                    "range": {
                        "saleStartDt": {
                            "to": "now/m",
                            "include_lower": "true",
                            "include_upper": "true",
                            "boost": 1
                        }
                    }
                },
                {
                    "range": {
                        "saleEndDt": {
                            "from": "now/m",
                            "include_lower": "true",
                            "include_upper": "true",
                            "boost": 1
                        }
                    }
                },
                {"term": {"docDispYn": {"value": "Y", "boost": 1}}},
                {
                    "bool": {
                        "should": [
                            {
                                "terms": {
                                    "itemStoreInfo.storeId": ["37", "20163"],
                                    "boost": 1
                                }
                            },
                            {"term": {"shipMethod": {"value": "TD_DLV", "boost": 1}}},
                            {"term": {"storeType": {"value": "DS", "boost": 1}}}
                        ],
                        "adjust_pure_negative": "true",
                        "boost": 1
                    }
                },
                {
                    "multi_match": {
                        "query": keyword,
                        "fields": [
                            "brandNmEng^1.0",
                            "brandNmKor^1.0",
                            "category.categorySearchKeyword^1.0",
                            "category.dcateNm^1.0",
                            "isbn^1.0",
                            "itemNo^1.0",
                            "itemOptionNms^1.0",
                            "itemStoreInfo.eventInfo.eventKeyword^1.0",
                            "searchItemNm^1.0",
                            "searchKeyword^1.0"
                        ],
                        "type": "cross_fields",
                        "operator": "AND",
                        "slop": 0,
                        "prefix_length": 0,
                        "max_expansions": 50,
                        "zero_terms_query": "NONE",
                        "auto_generate_synonyms_phrase_query": "false",
                        "fuzzy_transpositions": "true",
                        "boost": 1
                    }
                }
            ],
            "adjust_pure_negative": "true",
            "boost": 1
        }
    }
    return script_query

##### SEARCHING #####
def handle_query():
    # Aggregations for the array index (benefit stored as a flat field)
    aggregations_a = {
        "LCATE": {
            "terms": {
                "field": "filterInfo.lcate",
                "size": 500,
                "min_doc_count": 1,
                "shard_min_doc_count": 0,
                "show_term_doc_count_error": "false",
                "order": [
                    {"_count": "desc"},
                    {"LCATE_ORDER": "asc"},
                    {"_key": "asc"}
                ]
            },
            "aggregations": {
                "LCATE_ORDER": {"min": {"field": "category.lcatePriority"}}
            }
        },
        "PARTNER": {
            "terms": {
                "field": "filterInfo.shop",
                "size": 20,
                "min_doc_count": 1,
                "shard_min_doc_count": 0,
                "show_term_doc_count_error": "false",
                "order": [{"_count": "desc"}, {"_key": "asc"}]
            }
        },
        "BRAND": {
            "terms": {
                "field": "filterInfo.brand",
                "size": 20,
                "min_doc_count": 1,
                "shard_min_doc_count": 0,
                "show_term_doc_count_error": "false",
                "order": [{"_count": "desc"}, {"_key": "asc"}]
            }
        },
        "MALL_TYPE": {
            "terms": {
                "field": "mallType",
                "size": 10,
                "min_doc_count": 1,
                "shard_min_doc_count": 0,
                "show_term_doc_count_error": "false",
                "order": [{"_count": "desc"}, {"_key": "asc"}]
            }
        },
        "BENEFIT": {
            "terms": {
                "field": "filterInfo.benefit",
                "size": 10,
                "min_doc_count": 1,
                "shard_min_doc_count": 0,
                "show_term_doc_count_error": "false",
                "order": [{"_count": "desc"}, {"_key": "asc"}]
            }
        },
        "GRADE": {
            "range": {
                "field": "grade",
                "ranges": [
                    {"to": 1},
                    {"from": 1, "to": 2},
                    {"from": 2, "to": 3},
                    {"from": 3, "to": 4},
                    {"from": 4, "to": 5},
                    {"from": 5}
                ],
                "keyed": "false"
            }
        }
    }

    # Aggregations for the nested index: identical except that the benefit
    # terms aggregation runs inside a nested aggregation on "benefits"
    aggregations_n = {
        "LCATE": {
            "terms": {
                "field": "filterInfo.lcate",
                "size": 500,
                "min_doc_count": 1,
                "shard_min_doc_count": 0,
                "show_term_doc_count_error": "false",
                "order": [
                    {"_count": "desc"},
                    {"LCATE_ORDER": "asc"},
                    {"_key": "asc"}
                ]
            },
            "aggregations": {
                "LCATE_ORDER": {"min": {"field": "category.lcatePriority"}}
            }
        },
        "PARTNER": {
            "terms": {
                "field": "filterInfo.shop",
                "size": 20,
                "min_doc_count": 1,
                "shard_min_doc_count": 0,
                "show_term_doc_count_error": "false",
                "order": [{"_count": "desc"}, {"_key": "asc"}]
            }
        },
        "BRAND": {
            "terms": {
                "field": "filterInfo.brand",
                "size": 20,
                "min_doc_count": 1,
                "shard_min_doc_count": 0,
                "show_term_doc_count_error": "false",
                "order": [{"_count": "desc"}, {"_key": "asc"}]
            }
        },
        "MALL_TYPE": {
            "terms": {
                "field": "mallType",
                "size": 10,
                "min_doc_count": 1,
                "shard_min_doc_count": 0,
                "show_term_doc_count_error": "false",
                "order": [{"_count": "desc"}, {"_key": "asc"}]
            }
        },
        "BENEFITS": {
            "nested": {"path": "benefits"},
            "aggregations": {
                "BENEFITS_BENEFIT": {
                    "terms": {
                        "field": "benefits.benefit",
                        "size": 10,
                        "min_doc_count": 1,
                        "shard_min_doc_count": 0,
                        "show_term_doc_count_error": "false",
                        "order": [{"_count": "desc"}, {"_key": "asc"}]
                    }
                }
            }
        },
        "GRADE": {
            "range": {
                "field": "grade",
                "ranges": [
                    {"to": 1},
                    {"from": 1, "to": 2},
                    {"from": 2, "to": 3},
                    {"from": 3, "to": 4},
                    {"from": 4, "to": 5},
                    {"from": 5}
                ],
                "keyed": "false"
            }
        }
    }

data_a = []

data_n = []

keywords1 = ['홈밀','우유','계란','수박','두부','과자','생수','1+1','라면','콩나물','아이스크림','양파','오이','치즈','버섯','상추','요거트','쌀','바나나','복숭아','홈밀','우유','계란','수박','두부','생수','과자','1+1','라면','콩나물','아이스크림','양파','오이','치즈','버섯','상추','쌀','복숭아','요거트','바나나']

keywords2 = ['홈밀','우유','계란','수박','두부','과자','생수','1+1','라면','콩나물','아이스크림','양파','오이','치즈','버섯','상추','요거트','쌀','바나나','복숭아','홈밀','우유','계란','수박','두부','생수','과자','1+1','라면','콩나물','아이스크림','양파','오이','치즈','버섯','상추','쌀','복숭아','요거트','바나나']

keywords3 = ['오징어','칠면조','스파게티','홈프러스','투게더','치킨','초밥','시그램']

keywords = keywords1 + keywords2 + keywords3

for key in keywords:

search_start_a = time.time()

response_a = client.search(

index=ARRAY_INDEX_NAME,

body={

"size": SEARCH_SIZE,

"query": get_query(key),

"aggregations": aggregations_a

}

)

search_time_a = time.time() - search_start_a

data_a.append(round(search_time_a * 1000, 2))

# print (response_a['aggregations'])

# print (response_a['aggregations']['PARTNER'])

# if (len(response_a['aggregations']['BENEFIT']) > 0) :

# print (response_a['aggregations']['BENEFIT'])

# print (response_a['aggregations']['LCATE'])

# print (response_a['aggregations']['BRAND'])

# print (response_a['aggregations']['GRADE'])

# print (response_a['aggregations']['MALL_TYPE'])

for key in keywords:

search_start_n = time.time()

response_n = client.search(

index=NESTED_INDEX_NAME,

body={

"size": SEARCH_SIZE,

"query": get_query(key),

"aggregations": aggregations_n

}

)

search_time_n = time.time() - search_start_n

data_n.append(round(search_time_n * 1000, 2))

print()

print("{} total hits.".format(response_a["hits"]["total"]["value"]))

print("{} total hits.".format(response_n["hits"]["total"]["value"]))

print("search time_a: {:.2f} ms".format(search_time_a * 1000))

print("search time_n: {:.2f} ms".format(search_time_n * 1000))

print (data_a)

print (data_n)

# 평균 구하기

average_a = np.mean(data_a)

average_n = np.mean(data_n)

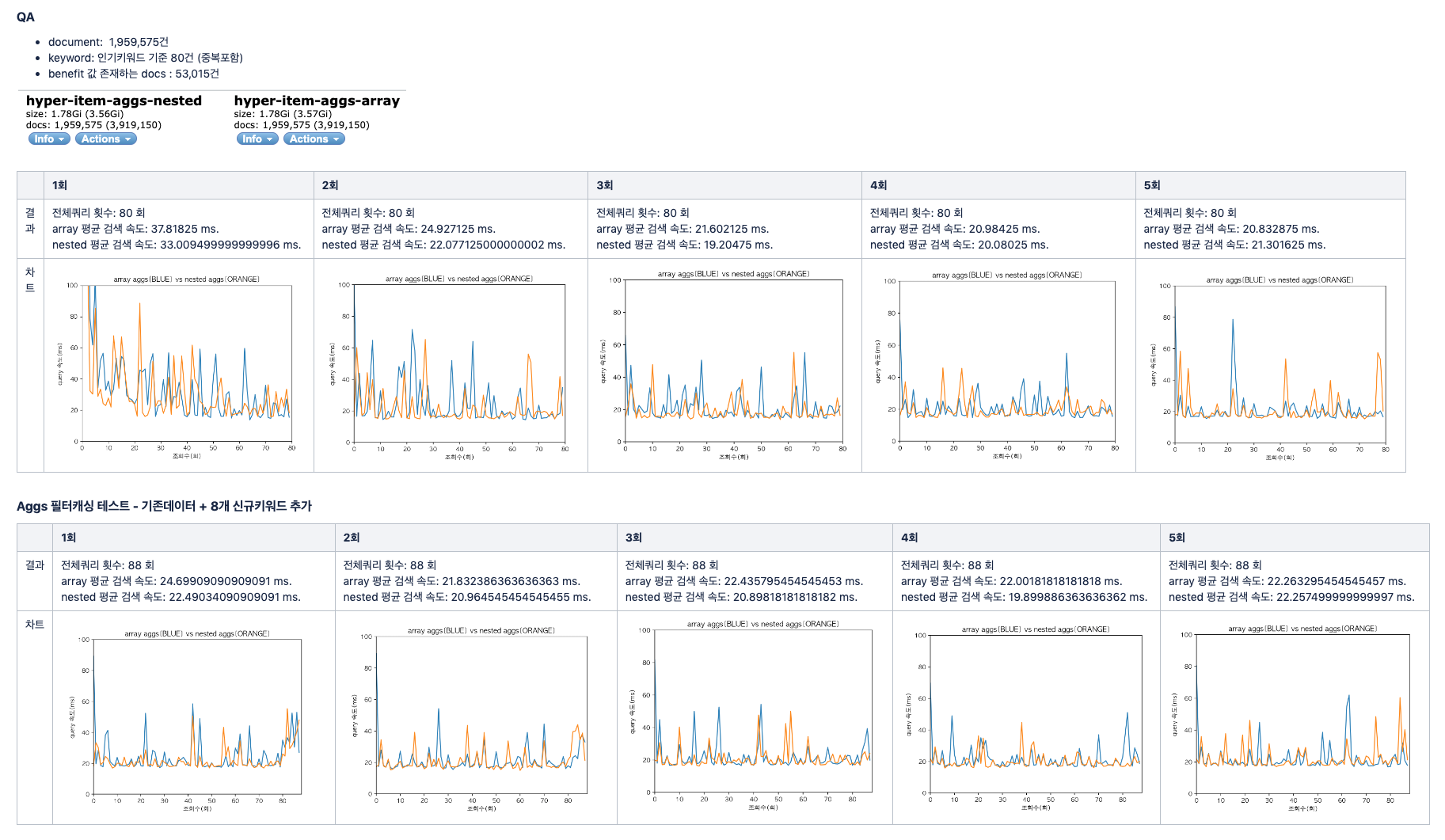

print("전체쿼리 횟수: {} 회".format(len(keywords)))

print("array 평균 검색 속도: {} ms.".format(average_a))

print("nested 평균 검색 속도: {} ms.".format(average_n))

xdata = range(len(keywords))

# create some y data points

ydata1 = data_a

ydata2 = data_n

# plot the data

fig = plt.figure()

ax = fig.add_subplot(1, 1, 1)

ax.plot(xdata, ydata1, color='tab:blue')

ax.plot(xdata, ydata2, color='tab:orange')

ax.set_ylabel('query 속도(ms)')

ax.set_xlabel('조회수(회)')

# create the events marking the x data points

xevents1 = EventCollection(xdata, color='tab:blue', linelength=0.05)

xevents2 = EventCollection(xdata, color='tab:orange', linelength=0.05)

# create the events marking the y data points

yevents1 = EventCollection(ydata1, color='tab:blue', linelength=0.05,

orientation='vertical')

yevents2 = EventCollection(ydata2, color='tab:orange', linelength=0.05,

orientation='vertical')

# add the events to the axis

ax.add_collection(xevents1)

ax.add_collection(xevents2)

ax.add_collection(yevents1)

ax.add_collection(yevents2)

# set the limits

ax.set_xlim([0, len(xdata)])

ax.set_ylim([0, 100])

ax.set_title('array aggs[BLUE] vs nested aggs[ORANGE]')

# display the plot

plt.show()

##### MAIN SCRIPT #####

if __name__ == '__main__':

ARRAY_INDEX_NAME = "hyper-item-aggs-array"

NESTED_INDEX_NAME = "hyper-item-aggs-nested"

SEARCH_SIZE = 0

client = Elasticsearch("https://elastic:dlengus@localhost:443/", ca_certs=False,

verify_certs=False)

print("start")

handle_query()
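
The script above compares the two indices by mean latency only, and a mean can be dominated by a few slow requests. As a rough sketch, the same latency lists could also be summarized with the median and the maximum. The helper name `summarize` and the sample values below are assumptions made for illustration; they are not from the original script and are not measurements.

```python
import statistics


def summarize(latencies_ms):
    """Return mean, median, and max for a list of latencies in milliseconds."""
    if not latencies_ms:
        raise ValueError("latencies_ms must not be empty")
    return {
        "mean": round(statistics.mean(latencies_ms), 2),
        "median": round(statistics.median(latencies_ms), 2),
        "max": max(latencies_ms),
    }


# Made-up sample values standing in for data_a and data_n.
sample_a = [40.0, 10.0, 12.0, 11.0]
sample_n = [55.0, 14.0, 13.0, 15.0]

for name, data in (("array", sample_a), ("nested", sample_n)):
    print(name, summarize(data))
```

In the script itself, `summarize(data_a)` and `summarize(data_n)` could be printed next to the existing averages.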
