

개발잡부

[tf] 11. RNN


!pip install -q -U tensorflow

!pip install -q tensorflow_datasetsimport numpy as np

import tensorflow_datasets as tfds

import tensorflow as tf

tfds.disable_progress_bar()import matplotlib.pyplot as plt

def plot_graphs(history, metric):

plt.plot(history.history[metric])

plt.plot(history.history['val_'+metric], '')

plt.xlabel("Epochs")

plt.ylabel(metric)

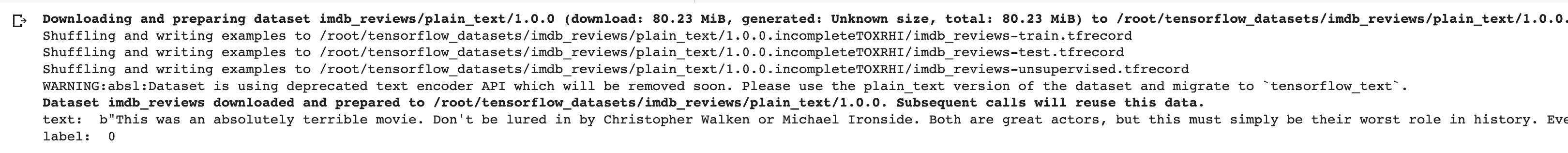

plt.legend([metric, 'val_'+ metric])dataset, info = tfds.load('imdb_reviews', with_info=True, as_supervised=True)

train_dataset, test_dataset = dataset['train'], dataset['test']

for example, label in train_dataset.take(1):

print('text: ', example.numpy() )

print('label: ', label.numpy())

BUFFER_SIZE = 10000

BATCH_SIZE = 64

train_dataset = train_dataset.shuffle(BUFFER_SIZE).batch(BATCH_SIZE).prefetch(tf.data.AUTOTUNE)

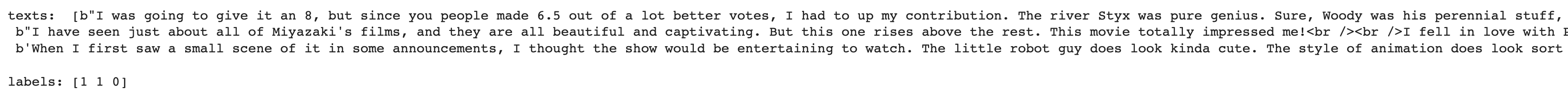

test_dataset = test_dataset.batch(BATCH_SIZE).prefetch(tf.data.AUTOTUNE)for example, label in train_dataset.take(1):

print('texts: ', example.numpy()[:3])

print()

print('labels:', label.numpy()[:3])

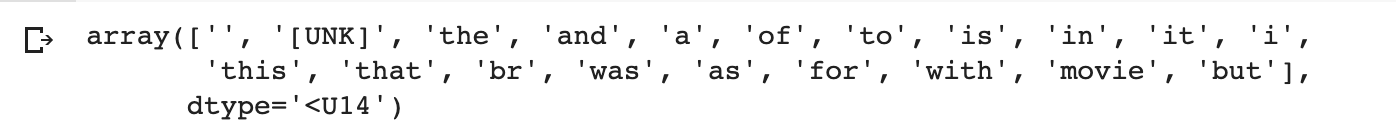
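`train_dataset.shuffle(BUFFER_SIZE)` does not shuffle the whole training split at once. tf.data keeps a buffer of `BUFFER_SIZE` elements and emits a random one from it each time. With 10,000 of the 25,000 training reviews in the buffer, the result is only a partial shuffle. The pure-Python sketch below imitates that buffered idea and then applies `.batch()`. `buffered_shuffle` and `batch` are hypothetical helper names, not tf.data APIs, and the random order will not match TensorFlow's.

```python
import random

def buffered_shuffle(elements, buffer_size, seed=None):
    """Approximate shuffle with a fixed-size buffer (the idea behind
    Dataset.shuffle): fill the buffer, then repeatedly emit a random
    buffered element and put the next input element in its slot."""
    rng = random.Random(seed)
    buffer = []
    for item in elements:
        if len(buffer) < buffer_size:
            buffer.append(item)          # still filling the buffer
            continue
        i = rng.randrange(len(buffer))
        yield buffer[i]                  # emit a random buffered element
        buffer[i] = item                 # refill its slot with the new element
    while buffer:                        # input exhausted: drain the buffer randomly
        yield buffer.pop(rng.randrange(len(buffer)))

def batch(elements, batch_size):
    """Group elements into lists of batch_size; the last one may be shorter
    (like Dataset.batch with drop_remainder=False)."""
    current = []
    for item in elements:
        current.append(item)
        if len(current) == batch_size:
            yield current
            current = []
    if current:
        yield current

data = list(range(10))
shuffled = list(buffered_shuffle(data, buffer_size=4, seed=0))
print(shuffled)                              # a permutation of 0..9
print([len(b) for b in batch(shuffled, 3)])  # [3, 3, 3, 1]
```

With `buffer_size=1` the order is unchanged. The first emitted element can only come from the first `buffer_size` inputs, so a buffer much smaller than the dataset mixes the data only locally.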

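```python
VOCAB_SIZE = 1000

# Recent TensorFlow releases expose this layer as tf.keras.layers.TextVectorization
# (older code used tf.keras.layers.experimental.preprocessing.TextVectorization).
encoder = tf.keras.layers.TextVectorization(max_tokens=VOCAB_SIZE)
encoder.adapt(train_dataset.map(lambda text, label: text))

vocab = np.array(encoder.get_vocabulary())
vocab[:20]
```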

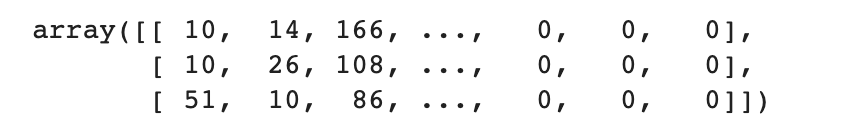
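`encoder.adapt()` builds the vocabulary that `vocab[:20]` prints. Index 0 is the padding token `''`, index 1 is `'[UNK]'` for out-of-vocabulary words, and the remaining `VOCAB_SIZE - 2` slots go to the most frequent tokens. The code below is a simplified pure-Python approximation of that behavior, not the Keras implementation. The helper names, the punctuation regex and the alphabetical tie-breaking are assumptions. It also shows why encoded batches are right-padded with 0, which is the value `mask_zero=True` in the Embedding layer tells the model to ignore.

```python
import re
import string
from collections import Counter

PUNCT = re.compile(f"[{re.escape(string.punctuation)}]")

def standardize(text):
    """Lowercase and strip ASCII punctuation (approximates the default
    'lower_and_strip_punctuation' standardization)."""
    return PUNCT.sub("", text.lower())

def build_vocab(texts, max_tokens):
    """'' (padding) at index 0, '[UNK]' at index 1, then the most frequent
    tokens, for at most max_tokens entries in total."""
    counts = Counter(tok for t in texts for tok in standardize(t).split())
    # Sort by descending count; ties broken alphabetically (an assumption).
    ranked = sorted(counts, key=lambda tok: (-counts[tok], tok))
    return ["", "[UNK]"] + ranked[:max_tokens - 2]

def encode(texts, vocab):
    """Map tokens to indices (unknown -> 1) and right-pad with 0 to the longest text."""
    index = {tok: i for i, tok in enumerate(vocab)}
    seqs = [[index.get(tok, 1) for tok in standardize(t).split()] for t in texts]
    width = max(len(s) for s in seqs)
    return [s + [0] * (width - len(s)) for s in seqs]

corpus = ["The movie was great!", "The plot was thin.", "Great acting, great movie."]
vocab = build_vocab(corpus, max_tokens=6)
print(vocab)                                         # ['', '[UNK]', 'great', 'movie', 'the', 'was']
print(encode(["A great movie", "the plot"], vocab))  # [[1, 2, 3], [4, 1, 0]]
```

"a" and "plot" fall outside the six-entry vocabulary and map to 1 (`[UNK]`). The shorter second text is padded with 0.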

```python
# Token indices for the first three reviews of the batch (0 = padding, 1 = [UNK]).
encoded_example = encoder(example)[:3].numpy()
encoded_example
```
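The model defined next wraps an LSTM cell in `Bidirectional`. The NumPy sketch below shows roughly what that computes. It assumes the standard LSTM equations with the gate order (input, forget, candidate, output) that Keras uses for its fused kernel. `recurrent_activation='sigmoid'` drives the gates, `activation='tanh'` drives the candidate and output, and `unit_forget_bias=True` starts the forget-gate bias at 1. The backward pass reads the sequence reversed, and the two final states are concatenated (the default `merge_mode='concat'`), so `LSTMCell(64)` yields 128 features for the following `Dense(64)`. Masking of padded steps is left out. The helper names and random weights are illustrative only.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def init_params(rng, input_dim, units):
    """Random kernels; forget-gate bias set to 1 (mimics unit_forget_bias=True)."""
    W = rng.normal(scale=0.1, size=(input_dim, 4 * units))  # input kernel
    U = rng.normal(scale=0.1, size=(units, 4 * units))      # recurrent kernel
    b = np.zeros(4 * units)
    b[units:2 * units] = 1.0
    return W, U, b

def lstm_step(x, h, c, W, U, b):
    """One time step; the fused pre-activation is split into i, f, c~, o."""
    z = x @ W + h @ U + b
    i, f, g, o = np.split(z, 4)
    i, f, o = sigmoid(i), sigmoid(f), sigmoid(o)  # recurrent_activation='sigmoid'
    g = np.tanh(g)                                # activation='tanh'
    c = f * c + i * g                             # new cell state
    h = o * np.tanh(c)                            # new hidden state
    return h, c

def run_lstm(xs, W, U, b):
    """Feed a (time, features) sequence through the cell; return the last h."""
    units = U.shape[0]
    h, c = np.zeros(units), np.zeros(units)
    for x in xs:
        h, c = lstm_step(x, h, c, W, U, b)
    return h

def bidirectional_lstm(xs, fwd_params, bwd_params):
    """Forward pass plus a pass over the reversed sequence, final states concatenated."""
    return np.concatenate([run_lstm(xs, *fwd_params),
                           run_lstm(xs[::-1], *bwd_params)])

rng = np.random.default_rng(0)
xs = rng.normal(size=(5, 64))  # 5 tokens, each a 64-dim embedding (output_dim=64)
out = bidirectional_lstm(xs, init_params(rng, 64, 64), init_params(rng, 64, 64))
print(out.shape)  # (128,)
```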

tf.keras.layers.LSTM(units, activation='tanh', recurrent_activation='sigmoid',

use_bias=True, kernel_initializer='glorot_uniform',

recurrent_initializer='orthogonal',

bias_initializer='zeros', unit_forget_bias=True,

kernel_regularizer=None, recurrent_regularizer=None, bias_regularizer=None,

activity_regularizer=None, kernel_constraint=None, recurrent_constraint=None,

bias_constraint=None, dropout=0.0, recurrent_dropout=0.0, return_sequences=False,

return_state=False, go_backwards=False, stateful=False,

time_major=False, unroll=False, **kwargs)model = tf.keras.Sequential([

encoder,

tf.keras.layers.Embedding(

input_dim=len(encoder.get_vocabulary()),

output_dim=64,

mask_zero=True),

#tf.keras.layers.LSTM(64),

tf.keras.layers.Bidirectional(tf.keras.layers.RNN(tf.keras.layers.LSTMCell(64))),

tf.keras.layers.Dense(64, activation='relu'),

tf.keras.layers.Dense(1)

])model.compile(loss=tf.keras.losses.BinaryCrossentropy(from_logits=True),

optimizer=tf.keras.optimizers.Adam(1e-4),

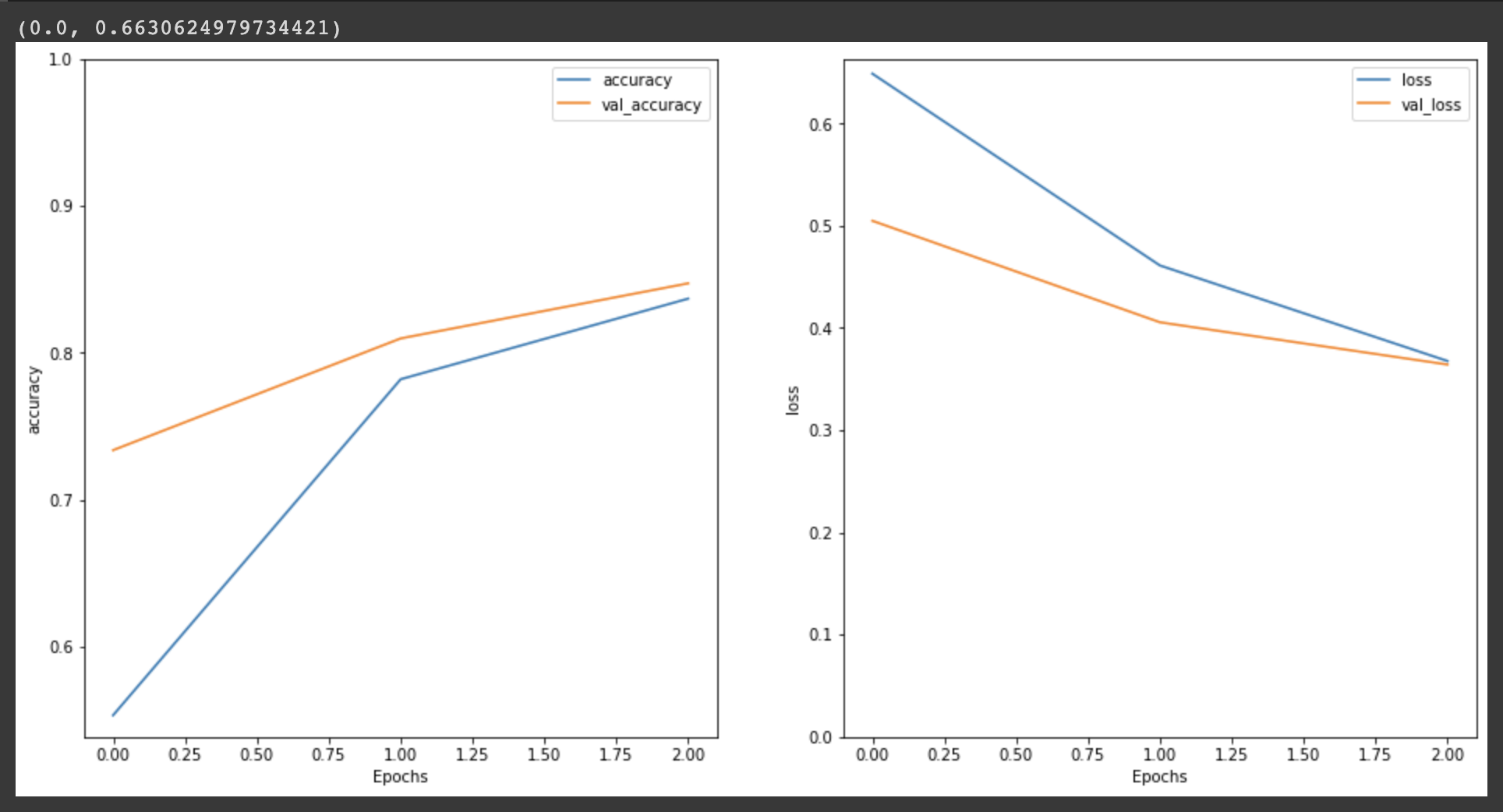

metrics=['accuracy'])history = model.fit(train_dataset, epochs=10, validation_data=test_dataset, validation_steps=30)test_loss, test_acc = model.evaluate(test_dataset)plt.figure(figsize=(16,8))

plt.subplot(1,2,1)

plot_graphs(history, 'accuracy')

plt.ylim(None,1)

plt.subplot(1,2,2)

plot_graphs(history, 'loss')

plt.ylim(0,None)
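The final `Dense(1)` layer has no activation, so the model outputs a raw logit per review, and the loss is configured for this with `from_logits=True`. The small NumPy sketch below shows why that matters. It assumes the standard numerically stable formula for sigmoid cross-entropy. The function names are hypothetical, and Keras' internal implementation may differ in detail. Computing the loss directly from logits agrees with sigmoid-then-cross-entropy for moderate values. It also stays accurate for very confident wrong predictions, where the probability route saturates at 1.0 and has to be clipped.

```python
import numpy as np

def bce_from_logits(y, z):
    """Stable binary cross-entropy on raw logits z:
    mean of max(z, 0) - z*y + log(1 + exp(-|z|))."""
    y, z = np.asarray(y, dtype=float), np.asarray(z, dtype=float)
    return np.mean(np.maximum(z, 0) - z * y + np.log1p(np.exp(-np.abs(z))))

def bce_from_probs(y, p, eps=1e-7):
    """Textbook binary cross-entropy on probabilities, clipped to avoid log(0)."""
    y = np.asarray(y, dtype=float)
    p = np.clip(np.asarray(p, dtype=float), eps, 1 - eps)
    return -np.mean(y * np.log(p) + (1 - y) * np.log(1 - p))

labels = [1, 0, 1, 0]
logits = [2.0, -1.0, 0.5, 3.0]  # raw Dense(1) outputs; > 0 means "positive"
probs = 1 / (1 + np.exp(-np.asarray(logits)))
print(bce_from_logits(labels, logits), bce_from_probs(labels, probs))  # nearly equal

# A confident wrong prediction: the logit route gives ~100,
# the clipped probability route is capped near -log(1e-7) ~ 16.1.
print(bce_from_logits([0], [100.0]), bce_from_probs([0], [1.0]))
```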
